In medicine, the "Second Opinion" is a sacred concept. When a case is complex, ambiguous, or high-stakes, a doctor asks a colleague. We present the case at morning rounds. We text a specialist friend. We convene tumor boards.

We do this because we know that medicine is an imperfect science and that human cognition—no matter how brilliant—is subject to fatigue, bias, and gaps in knowledge.

But what happens at 3:00 AM in a rural clinic when there are no colleagues to ask? What happens when a primary care physician is faced with a constellation of symptoms that belongs to a rare disease they haven’t seen since medical school?

This is where Medical AI changes the paradigm, specifically advanced models like Med-PaLM, accessible via Dr7.ai. We are moving from the era of "AI as a Tool" to "AI as a Colleague."

Here is how specialized Large Language Models (LLMs) are enhancing clinical reasoning and acting as the ultimate safety net for modern healthcare.

The Burden of the "Lonely Decision"

According to the Society to Improve Diagnosis in Medicine, diagnostic errors affect an estimated 12 million Americans each year. These aren't usually caused by incompetence; they are caused by cognitive overload.

The sheer volume of medical knowledge is exploding. A 2011 study projected that by 2020 medical knowledge would double every 73 days. It is humanly impossible for a physician to stay current on every new paper, every new drug interaction, and every rare genetic marker.

When a doctor misses a diagnosis, it is often due to Anchoring Bias (latching onto the first symptom and ignoring contradictory evidence) or the Availability Heuristic (diagnosing what comes most readily to mind rather than what is present).

This is where AI shines. It doesn't get tired. It doesn't have an ego. And it doesn't forget a paper it was trained on.

Med-PaLM: Beyond the Search Engine

It is important to distinguish between "Googling a symptom" and "Consulting Med-PaLM."

When you use a search engine, you are retrieving documents. You get a list of links that you must read, synthesize, and filter for relevance.

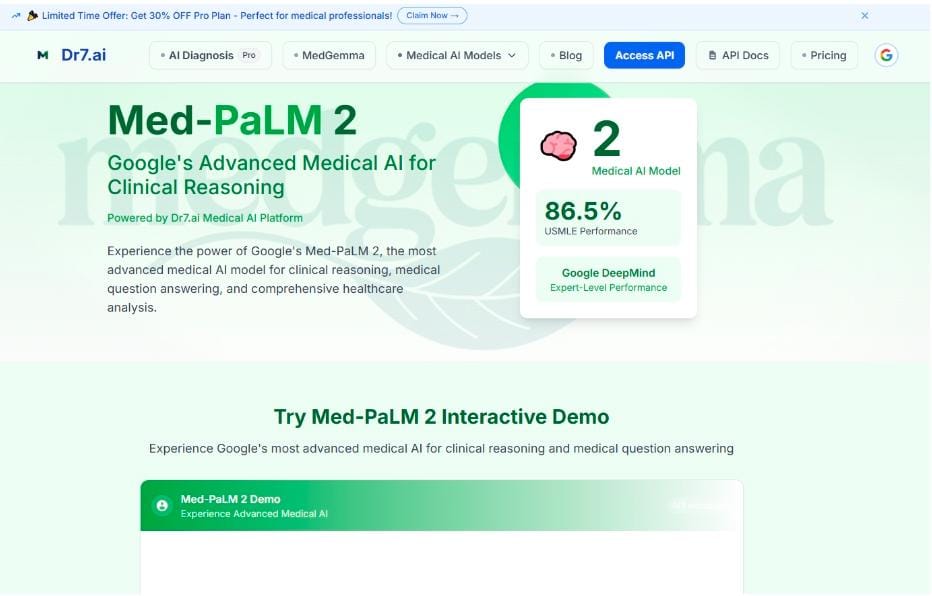

Med-PaLM (Google's specialized medical model) performs Clinical Reasoning. It doesn't just match keywords; it mimics the deductive process of a clinician. When tested on U.S. Medical Licensing Examination (USMLE)-style questions, Med-PaLM 2 performed at an "expert" level, scoring over 85%.

Through the Dr7.ai ecosystem, this capability is now accessible not just to research hospitals, but to any clinician. Here is how it functions as a "Second Opinion" in three distinct workflows.

1. The Differential Diagnosis Generator

Imagine a patient presents with fatigue, mild joint pain, and non-specific abdominal discomfort. A busy GP might diagnose a viral infection or IBS (Irritable Bowel Syndrome) and send them home.

However, an AI model, unrestricted by the "most likely" bias, can instantly scan the entire ontology of human disease.

The Workflow: A doctor inputs the symptoms and lab values into the Dr7.ai interface (securely and anonymously) and asks: "Generate a differential diagnosis, ranked by probability, but include rare etiologies that must be ruled out."

The AI might suggest:

- Viral Gastroenteritis (Common)
- IBS (Common)
- Whipple's Disease (Rare)
- Hemochromatosis (Genetic)

The doctor might have forgotten about Whipple's Disease. But seeing it on the list triggers a memory: "Wait, did I check for malabsorption?" The AI prompts the human to look closer. It doesn't make the decision, but it widens the aperture of possibility.
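The workflow above can be sketched in a few lines of Python. This is only an illustration of assembling a de-identified prompt: the names `CaseSummary`, `strip_identifiers`, `build_differential_prompt`, and the `IDENTIFYING_KEYS` list are hypothetical, not part of Dr7.ai or Med-PaLM, and dropping a handful of keys is not a complete de-identification procedure. No request is sent anywhere; the sketch stops at the prompt text.

```python
from dataclasses import dataclass, field

# Hypothetical set of record keys treated as direct identifiers in this sketch.
IDENTIFYING_KEYS = {"name", "mrn", "date_of_birth", "address", "phone"}


@dataclass
class CaseSummary:
    age: int
    sex: str
    symptoms: list[str]
    labs: dict[str, str] = field(default_factory=dict)


def strip_identifiers(record: dict) -> dict:
    """Drop keys that directly identify the patient (illustrative only)."""
    return {k: v for k, v in record.items() if k.lower() not in IDENTIFYING_KEYS}


def build_differential_prompt(case: CaseSummary) -> str:
    """Combine the case facts with the request quoted in the workflow."""
    lab_lines = "\n".join(f"- {k}: {v}" for k, v in case.labs.items()) or "- none provided"
    return (
        f"Patient: {case.age}-year-old {case.sex}.\n"
        f"Symptoms: {', '.join(case.symptoms)}.\n"
        f"Labs:\n{lab_lines}\n"
        "Generate a differential diagnosis, ranked by probability, "
        "but include rare etiologies that must be ruled out."
    )


# Example with made-up data: identifiers are removed before the prompt is built.
raw_record = {
    "name": "Jane Doe",
    "MRN": "12345",
    "age": 48,
    "sex": "female",
    "symptoms": ["fatigue", "mild joint pain", "non-specific abdominal discomfort"],
    "labs": {"ferritin": "elevated"},
}
example_case = CaseSummary(**strip_identifiers(raw_record))
print(build_differential_prompt(example_case))
```

Keeping the identifier filter separate from the prompt builder means the text that leaves the clinic can be inspected before it goes anywhere.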

2. The "Devil’s Advocate" (Red Teaming)

One of the most powerful ways to use AI is to ask it to prove you wrong. In high-stakes industries like aerospace or intelligence, this is called "Red Teaming."

The Workflow: A clinician thinks they have the right diagnosis. They can input their conclusion into the model: "Patient is a 50-year-old male with chest pain. EKG is normal. I suspect acid reflux. Tell me why I might be wrong. What am I missing?"

The AI, trained on millions of case studies, might reply: "Consider Aortic Dissection. While rare, a normal EKG does not rule it out, especially if the patient describes a tearing sensation. Also consider Pulmonary Embolism if tachycardia is present."

This forces the physician to pause and reconsider. It breaks the "Anchoring Bias." It acts as the annoying but necessary colleague who asks, "Are you sure?"
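A "Devil's Advocate" request can be templated so the clinician always states a working diagnosis and the model is always asked to argue against it. The sketch below is a hypothetical helper (`build_red_team_prompt` is not a Dr7.ai or Med-PaLM function), and the final instruction asking for an excluding test is an added assumption, not wording from any product.

```python
def build_red_team_prompt(presentation: str, findings: list[str], working_diagnosis: str) -> str:
    """Build a prompt that asks the model to challenge the clinician's conclusion."""
    if not working_diagnosis.strip():
        # Without a stated conclusion there is nothing for the model to argue against.
        raise ValueError("A working diagnosis is required for a red-team prompt.")
    findings_text = "; ".join(findings) if findings else "none recorded"
    return (
        f"Case: {presentation}\n"
        f"Findings so far: {findings_text}\n"
        f"My working diagnosis: {working_diagnosis}\n"
        "Tell me why I might be wrong. What am I missing? "
        # Assumption: asking for a discriminating test makes the reply easier to act on.
        "For each alternative, name the finding or test that would exclude it."
    )


# Example using the case from the workflow above.
red_team_prompt = build_red_team_prompt(
    presentation="50-year-old male with chest pain",
    findings=["EKG is normal"],
    working_diagnosis="acid reflux",
)
print(red_team_prompt)
```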

3. The Translator: Simplifying Patient Communication

Clinical reasoning isn't just about figuring out what's wrong; it's about explaining it to the patient. Compliance with treatment is low when patients don't understand their condition.

Doctors often struggle to break down complex "Medicalese" into layperson terms.

The Workflow: After diagnosing a patient with Atrial Fibrillation, a doctor can ask the AI: "Explain Atrial Fibrillation and the need for anticoagulants to a 70-year-old patient with low health literacy. Use analogies involving household wiring and plumbing."

The model generates a script comparing the heart's electrical signals to a "glitchy fuse box" and the blood clots to "sludge in a slow-moving pipe." This empowers the doctor to communicate more effectively, improving patient outcomes.

Trust, But Verify: The "Resident" Metaphor

The biggest barrier to adoption is fear. Doctors fear liability. "What if the AI is wrong?"

The best way to integrate these models is to treat them like a highly intelligent Medical Resident.

- A resident is brilliant and has read all the textbooks.
- But a resident lacks experience and can make mistakes.
- As the Attending Physician, you sign the note. You make the final call.

You trust the resident to do the legwork, summarize the labs, and suggest a plan, but you verify every critical data point.

Platforms like Dr7.ai facilitate this relationship by providing access to models that cite their sources. When Med-PaLM suggests a diagnosis, it draws from established medical consensus. This transparency allows the "Attending" (the human doctor) to audit the AI's logic.
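One way to picture the "Resident" relationship is as a sign-off gate: AI suggestions stay in draft until the attending has verified each one. The following is a minimal sketch under that assumption. The `Suggestion` and `DraftNote` classes are hypothetical and do not describe how Dr7.ai or any clinical record system actually works.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Suggestion:
    text: str
    sources: list[str] = field(default_factory=list)  # citations returned with the suggestion
    verified: bool = False  # set to True by the clinician after checking the claim


@dataclass
class DraftNote:
    suggestions: list[Suggestion]
    signed_by: Optional[str] = None

    def unverified(self) -> list[Suggestion]:
        """Suggestions the attending has not yet checked."""
        return [s for s in self.suggestions if not s.verified]

    def uncited(self) -> list[Suggestion]:
        """Suggestions that arrived without any source to audit."""
        return [s for s in self.suggestions if not s.sources]

    def sign(self, attending: str) -> None:
        """Refuse to sign while any suggestion is still unverified."""
        pending = self.unverified()
        if pending:
            raise ValueError(f"{len(pending)} suggestion(s) not yet verified")
        self.signed_by = attending


# Example with made-up content: the note cannot be signed until every item is checked.
note = DraftNote(
    suggestions=[
        Suggestion("Consider Whipple's Disease", sources=["(placeholder citation)"]),
        Suggestion("Check for malabsorption"),
    ]
)
for item in note.unverified():
    item.verified = True  # stands in for the clinician's own review
note.sign("Attending Physician")
print(note.signed_by)
```

The `uncited` helper reflects the point about transparency: a suggestion with no source gives the attending nothing to audit, so it deserves extra scrutiny before it is marked verified.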

The Future of the Consult

We are heading toward a future of "Centaur" Medicine—half human, half machine.

Several studies suggest that AI alone is good, and Human alone is good, but Human + AI can be superior to both.

- The AI brings the raw processing power of millions of medical journals.
- The Human brings empathy, intuition, physical examination skills, and ethical judgment.

The "Second Opinion" is no longer a luxury reserved for teaching hospitals. With the unified access provided by Dr7.ai, every clinician—whether in a bustling city center or a remote clinic—can have a panel of world-class experts sitting virtually on their shoulder.

The question for medical professionals is no longer "Will AI replace me?" It is: "How much better can I be at my job when I have infinite knowledge at my fingertips?"

Media Contact

Company Name: Dr7.ai

Country: United States

Website: https://dr7.ai/